The V-Model describes testing activities as part of the software development process. It can be interpreted as an extension of the Waterfall development model, in which testing is one of the last steps of a sequential development process. In contrast to the Waterfall model, however, the V-Model shows the relationship between each development phase and a corresponding test activity.

Even though the V-Model is criticized for simplifying the software development process and for not mapping completely onto modern Agile development processes, it includes a number of terms and concepts that are essential to software testing. These terms and concepts can help you find a proper structure for the testing efforts in your software project. In addition, the V-Model can serve as a model for each iteration of an Agile project.

Introduction to the V-Model

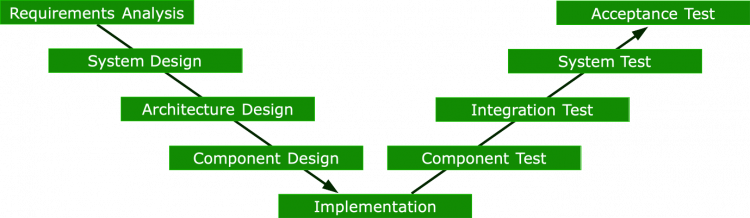

The main idea behind the V-model is that development and testing activities correspond to each other. Each development phase should be completed by its own testing activity. Each of these testing activities covers a different abstraction level: software components, the integration of components, the complete software system and the user acceptance. Instead of just testing a monolithic piece of software at the end of the development process, this approach of focusing on different abstraction layers makes it much easier to trigger, analyze, locate and fix software defects.

The V-Model describes the development phases as the left branch of a "V", while the right branch represents the test activities. Such a V-Model is shown in the following illustration:
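To make the pairing concrete, it can be recorded as a simple lookup table. The Python sketch below is illustrative only: it uses the phase and stage names from this article, and the helpers `stage_for_phase` and `walk_the_v` are hypothetical, not part of any tool.

```python
# Illustrative sketch: the V-Model pairing as a lookup table.
# Left branch (development phase) -> right branch (paired test stage).
from typing import Optional

V_MODEL = [
    ("Requirements Analysis", "Acceptance Tests"),
    ("System Design / Functional Design", "System Tests"),
    ("Architecture Design", "Integration Tests"),
    ("Component Design", "Component Tests"),
    ("Implementation", None),  # bottom of the "V": no paired test stage
]


def stage_for_phase(phase: str) -> Optional[str]:
    """Return the test stage paired with a development phase."""
    for dev_phase, test_stage in V_MODEL:
        if dev_phase == phase:
            return test_stage
    raise KeyError(f"unknown development phase: {phase!r}")


def walk_the_v() -> list[str]:
    """Go down the left branch, then back up the right branch."""
    left = [phase for phase, _ in V_MODEL]
    right = [stage for _, stage in reversed(V_MODEL) if stage is not None]
    return left + right
```

Calling `walk_the_v()` lists the five development phases from top to bottom, followed by the four test stages from bottom to top, which is exactly the shape of the "V".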

Development Phases

The development phases from the V-model are commonly known either from the Waterfall model or as logical phases of real-world development pipelines. We will go through each phase.

Requirements Analysis

First, the requirements of the software system have to be discovered and gathered. This is typically done in sessions with users and other stakeholders, for example through interviews, and through document research. Analyzing the gathered information then defines the requirements more precisely.

The software requirements are stored persistently as a high-level requirements document.

System Design / Functional Design

Based on the output of the Requirements Analysis, the system is designed at the functional level. This includes the definition of functions, user interface elements (such as dialogs and menus), workflows and data structures.

Documents for the system tests can now be prepared.

If the test-driven approach of Behavior Driven Development (BDD) is followed, the feature specification is created in this phase. This might be a surprise, because BDD is usually associated with the component level. However, the BDD feature of Squish introduced BDD to the functional level.
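To illustrate what a feature specification written during functional design might look like, and how it can later drive a test, here is a minimal Python sketch. The scenario text, the `step` decorator, the `run_scenario` runner and the `Calculator` stand-in are all hypothetical; real BDD tools, including Squish, provide their own step-definition APIs, which this sketch does not reproduce.

```python
# Hypothetical sketch: a Gherkin-style feature specification and a tiny
# runner that maps each Given/When/Then line to a step implementation.
import re

FEATURE = """\
Feature: Adding numbers
  Scenario: Add two numbers
    Given the calculator is cleared
    When I add 2 and 3
    Then the display shows 5
"""

steps = []  # registered (compiled pattern, step function) pairs


def step(pattern):
    """Register a step implementation for lines that fully match `pattern`."""
    def register(func):
        steps.append((re.compile(pattern), func))
        return func
    return register


class Calculator:
    """Hypothetical stand-in for the application under test."""
    def __init__(self):
        self.display = 0


@step(r"the calculator is cleared")
def clear(ctx):
    ctx["calc"] = Calculator()


@step(r"I add (\d+) and (\d+)")
def add_numbers(ctx, a, b):
    ctx["calc"].display = int(a) + int(b)


@step(r"the display shows (\d+)")
def check_display(ctx, expected):
    assert ctx["calc"].display == int(expected)


def run_scenario(feature_text):
    """Execute every Given/When/Then/And line; return the number of steps run."""
    ctx = {}
    executed = 0
    for line in feature_text.splitlines():
        keyword, _, text = line.strip().partition(" ")
        if keyword not in ("Given", "When", "Then", "And"):
            continue  # skip Feature/Scenario headers and blank lines
        for pattern, func in steps:
            match = pattern.fullmatch(text)
            if match:
                func(ctx, *match.groups())
                executed += 1
                break
        else:
            raise LookupError(f"no step implementation for: {line.strip()}")
    return executed
```

The point of the design is that the scenario text can be agreed on during functional design, long before the step implementations exist.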

Architecture Design

The functional design is followed by the design of the system architecture as a whole and its separation into components. During this phase, general component functionality, interfaces and dependencies are specified. This typically involves modeling languages such as UML, and design patterns that solve common problems.

Since the components of the system and their interfaces are now known, integration test preparation can start in this phase.

Component Design

The next phase is about the low-level design of the specific components. Each component is described in detail, including the internal logic to be implemented, a detailed interface specification that describes the API, and database tables, if any.

Since the interface specification and the functional description of the components now exist, component tests can be prepared.
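One way to prepare component tests from the interface specification alone is to write them against an abstract interface, so that they can run against any implementation once it exists. The sketch below assumes a hypothetical `TemperatureConverter` component; `contract_tests` and `run_component_tests` are illustrative helpers, not part of any framework.

```python
# Sketch: component tests written against an interface specification
# before any implementation exists. All names are hypothetical.
import unittest
from abc import ABC, abstractmethod


class TemperatureConverter(ABC):
    """Interface specification from the component design phase."""

    @abstractmethod
    def to_fahrenheit(self, celsius: float) -> float:
        """Return Fahrenheit; raise ValueError below absolute zero."""


def contract_tests(factory):
    """Build a TestCase that checks any implementation created by `factory`."""
    class Contract(unittest.TestCase):
        def setUp(self):
            self.converter = factory()

        def test_freezing_point(self):
            self.assertAlmostEqual(self.converter.to_fahrenheit(0.0), 32.0)

        def test_boiling_point(self):
            self.assertAlmostEqual(self.converter.to_fahrenheit(100.0), 212.0)

        def test_rejects_below_absolute_zero(self):
            with self.assertRaises(ValueError):
                self.converter.to_fahrenheit(-300.0)

    return Contract


# Written later, in the implementation phase.
class SimpleConverter(TemperatureConverter):
    def to_fahrenheit(self, celsius: float) -> float:
        if celsius < -273.15:
            raise ValueError("below absolute zero")
        return celsius * 9 / 5 + 32


def run_component_tests(factory) -> unittest.TestResult:
    """Run the prepared tests against one implementation and return the result."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(contract_tests(factory))
    result = unittest.TestResult()
    suite.run(result)
    return result
```

`run_component_tests(SimpleConverter).wasSuccessful()` reports whether the implementation satisfies the tests prepared during component design.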

If the test-driven approach of Behavior Driven Development (BDD) is used for the component level, the feature specification for the individual components is created.

Implementation

The implementation phase is the actual coding work in a specific programming language. It follows the specifications determined in the earlier development phases.

Verification vs. Validation

According to the V-Model, a tester has to verify whether the requirements of a specific development phase are met. In addition, the tester has to validate that the system meets the needs of the user, the customer or other stakeholders. In practice, tests include both verification and validation. Typically, the higher the abstraction level, the larger the share of validation compared to verification.

Test Stages

According to the V-Model, test activities are separated into the following test stages, each of them in relation to a specific development phase:

Component Tests

These tests verify that the smallest components of the program function correctly. Depending on the programming language, a component can be a module, a unit or a class, and the tests are accordingly called module tests, unit tests or class tests. Component tests provide input based on the component specification and check the component's output. If the test-driven approach of BDD is followed, these tests are written as feature files.

The main characteristic of component tests is that single components are tested in isolation and without interfacing with other components. Because no other components are involved, it is much easier to locate defects.

Typically, the tests are implemented with the help of a test framework; JUnit, CppUnit and PyUnit are a few popular unit test frameworks. To make sure the tests cover as much of the component's source code as possible, code coverage can be measured and analyzed with our code coverage analysis tool Coco.
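PyUnit is the original name of Python's standard `unittest` module. The following sketch shows a component test that keeps the component isolated by replacing its only dependency with a `unittest.mock.Mock`. `PriceCalculator` and its rate service are hypothetical, and coverage measurement is not shown.

```python
# Sketch of a component (unit) test in isolation. PriceCalculator and its
# exchange-rate dependency are hypothetical examples.
import unittest
from unittest import mock


class PriceCalculator:
    """Component under test."""

    def __init__(self, rate_service):
        self.rate_service = rate_service  # another component, injected

    def price_in(self, amount_eur: float, currency: str) -> float:
        if amount_eur < 0:
            raise ValueError("amount must not be negative")
        rate = self.rate_service.rate("EUR", currency)
        return round(amount_eur * rate, 2)


class PriceCalculatorTest(unittest.TestCase):
    def setUp(self):
        # A mock replaces the real rate service, so no other component runs.
        self.rates = mock.Mock()
        self.rates.rate.return_value = 1.5
        self.calc = PriceCalculator(self.rates)

    def test_converts_using_rate(self):
        self.assertEqual(self.calc.price_in(10.0, "USD"), 15.0)
        self.rates.rate.assert_called_once_with("EUR", "USD")

    def test_rejects_negative_amount(self):
        with self.assertRaises(ValueError):
            self.calc.price_in(-1.0, "USD")
        self.rates.rate.assert_not_called()
```

Saved in a file such as `test_price.py` (a hypothetical name), the tests run with `python -m unittest test_price`. If a test fails here, the defect can only be in `PriceCalculator`, because the mock stands in for everything else.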

Besides functionality, component tests can address non-functional aspects such as efficiency (e.g. storage consumption, memory consumption and program timing) and maintainability (e.g. code complexity and proper documentation). Coco can also help with these tasks through its code complexity analysis and its function profiler.
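As a rough illustration of non-functional component checks, the sketch below measures peak memory allocation with Python's `tracemalloc` module and elapsed time with `time.perf_counter`, then compares both against budgets. The `build_index` component and the budget values are hypothetical; in practice such budgets would come from the component specification, and a dedicated profiler gives more reliable numbers.

```python
# Sketch of efficiency checks for a component. The component and the
# budgets below are hypothetical assumptions.
import time
import tracemalloc

MAX_PEAK_BYTES = 1_000_000  # hypothetical memory budget
MAX_SECONDS = 0.5           # hypothetical timing budget


def build_index(words):
    """Component under test: map each word to the positions where it occurs."""
    index = {}
    for pos, word in enumerate(words):
        index.setdefault(word, []).append(pos)
    return index


def measure(func, *args):
    """Return (result, peak traced bytes, elapsed seconds) for one call."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = func(*args)
    finally:
        elapsed = time.perf_counter() - start
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    return result, peak, elapsed


def check_efficiency(words):
    """Run the component and fail if it exceeds the memory or time budget."""
    index, peak, elapsed = measure(build_index, words)
    assert peak <= MAX_PEAK_BYTES, f"peak memory {peak} B exceeds budget"
    assert elapsed <= MAX_SECONDS, f"took {elapsed:.3f} s, budget {MAX_SECONDS} s"
    return index
```

Timing checks are sensitive to machine load, so on shared build machines the time budget usually needs a generous margin.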

Integration Tests

Integration tests verify that components which have been developed and tested independently can be integrated with each other and communicate as expected. Tests can cover just two specific components, groups of components or even independent subsystems of a software system. Integration testing typically takes place after the components have been tested at the lower component testing stage.

The development of integration tests can be based on the functional specification, the system architecture, use cases or workflow descriptions. Integration testing focuses on the component interfaces (or subsystem interfaces) and aims to reveal defects that are triggered by the interaction of components and would not be triggered when testing the components in isolation.
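The sketch below shows the difference from a component test: two hypothetical components, an `OrderParser` and an `OrderStore`, are wired together without mocks, so the test targets the data passed across their interface. The components and the `import_orders` glue function are illustrative assumptions.

```python
# Sketch of a component integration test: both real components are used,
# and the test checks what happens at the interface between them.
import csv
import io
import unittest


class OrderParser:
    def parse(self, text: str) -> list[dict]:
        """Turn CSV text with 'id,quantity' columns into order records."""
        reader = csv.DictReader(io.StringIO(text))
        return [{"id": row["id"], "quantity": int(row["quantity"])} for row in reader]


class OrderStore:
    def __init__(self):
        self._orders = {}

    def add(self, order: dict) -> None:
        """Store an order; expects 'id' (str) and 'quantity' (int > 0)."""
        if order["quantity"] <= 0:
            raise ValueError(f"invalid quantity for order {order['id']}")
        self._orders[order["id"]] = order["quantity"]

    def total_quantity(self) -> int:
        return sum(self._orders.values())


def import_orders(text: str, parser: OrderParser, store: OrderStore) -> int:
    """Glue code under test: the parser's output feeds the store."""
    orders = parser.parse(text)
    for order in orders:
        store.add(order)
    return len(orders)


class OrderImportIntegrationTest(unittest.TestCase):
    def setUp(self):
        self.store = OrderStore()

    def test_parsed_orders_reach_the_store(self):
        count = import_orders("id,quantity\nA1,2\nB7,5\n", OrderParser(), self.store)
        self.assertEqual(count, 2)
        self.assertEqual(self.store.total_quantity(), 7)

    def test_invalid_quantity_is_rejected_at_the_interface(self):
        with self.assertRaises(ValueError):
            import_orders("id,quantity\nA1,0\n", OrderParser(), self.store)
```

A mismatch such as the parser emitting quantities as strings while the store expects integers would pass both components' isolated tests but fail here.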

Integration tests can also focus on interfaces to the application environment, like external software or hardware systems. This is typically called system integration testing in contrast to component integration testing.

Depending on the type of software, the test driver can be a unit test framework, too. For GUI applications, our Squish GUI Tester can drive the test. Squish can automate subsystems or external systems, e.g. configuration tools, along with the application under test itself.

System Tests

The system test stage covers the software system as a whole. While integration tests are mostly based on technical specifications, system tests are created with the user's point of view in mind and focus on the functional and non-functional application requirements. The latter may include performance, load and stress tests.

Although the components and their integration have already been tested, system tests are needed to validate that the functional software requirements are fulfilled. Some functionality cannot be tested at all without running the complete software system. System tests typically also draw on further documents, such as the user documentation or risk analysis documents.

The test environment for system tests should be as close to the production environment as possible. If possible, the same hardware, operating systems, drivers, network infrastructure and external systems should be used, and placeholders should be avoided wherever possible, so that the tests reproduce the behavior of the production system. However, the production environment itself should not be used as a test environment, because any defects triggered by the system tests could affect the production system.
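A simple safeguard against accidentally running system tests in production is to resolve the target environment up front and refuse production hosts. In the sketch below, the `TEST_TARGET` and `TEST_USE_STUBS` environment variables, the host names and `resolve_target` are all hypothetical assumptions; the `uses_placeholders` flag makes it visible when a run deviates from the production-like setup.

```python
# Hypothetical sketch: resolve the system test target and refuse production.
import os
from dataclasses import dataclass
from typing import Mapping, Optional

PRODUCTION_HOSTS = frozenset({"app.example.com"})  # hypothetical host list


@dataclass(frozen=True)
class SystemTestTarget:
    host: str
    uses_placeholders: bool  # True if external systems are replaced by stubs


def resolve_target(env: Optional[Mapping[str, str]] = None) -> SystemTestTarget:
    """Pick the test host from the environment; never return a production host."""
    env = os.environ if env is None else env
    host = env.get("TEST_TARGET", "staging.example.com")
    if host in PRODUCTION_HOSTS:
        raise RuntimeError(f"refusing to run system tests against production host {host!r}")
    stubs = env.get("TEST_USE_STUBS", "0") == "1"
    return SystemTestTarget(host=host, uses_placeholders=stubs)
```

Reporting `uses_placeholders` alongside the test results keeps runs with stubbed external systems distinguishable from runs against the full production-like setup.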

System tests should be automated to avoid time-consuming manual test runs. Since the introduction of image recognition and optical character recognition (OCR), Squish can automate any GUI application and can trigger and feed non-GUI processes. In addition to verifying application output through the GUI, Squish can access internal application data available in both GUI and non-GUI objects. Further capabilities cover most of the remaining needs of system testing: testing multiple applications, even ones based on different GUI toolkits, in a single automated test; embedded device testing; access to external systems such as database systems; and semi-automated testing that includes manual verifications and validations.

Acceptance Testing

Acceptance tests can be internal, for example for a software version that has not been released yet. These internal acceptance tests are often performed by people who are not involved in development or testing, such as product management, sales or support staff.

However, acceptance tests can also be external, performed by the company that commissioned the development or by the end users of the software.

In customer acceptance testing, it may even be the customer's responsibility, partially or completely, to decide whether the final release of the software meets the high-level requirements. Reports are created to document the test results and to serve as a basis for discussion between the developing party and the client.

In contrast to customer acceptance testing, user acceptance testing (UAT) may be the last step before a final software release. It is a user-centric test that verifies that the software actually supports the workflows users need to carry out. These tests may even cover usability aspects and the general user experience.

The effort put into acceptance testing may vary depending on the type of software. For custom software developed for a single client, extensive testing and reporting can be done. For off-the-shelf software, less effort goes into this testing stage, and acceptance tests might be as slim as checking the installation and a few workflows.

Acceptance tests are typically manual, and often only a small portion of them is automated, mainly because they may be executed only once at the end of the development process. However, since acceptance testing focuses on workflows and user interaction, we recommend using Squish to automate acceptance tests. BDD can serve as a common language and specification for all stakeholders. Also, consider the effort that has to be put into acceptance testing multiple versions over the software lifecycle, or the many iterations of an Agile project. In the mid and long term, test automation will save you a lot of time.