Qt Graphics with Multiple Displays on Embedded Linux

September 19, 2016 by Laszlo Agocs

Creating devices with multiple screens is not new to Qt. Those using Qt for Embedded in the Qt 4 days may remember configuration steps like this. The story got significantly more complicated with Qt 5's focus on hardware accelerated rendering, so now it is time to take a look at where we are today with the upcoming Qt 5.8.

Windowing System Options on Embedded

The most common ways to run Qt applications on an embedded board with accelerated graphics (typically EGL + OpenGL ES) are the following:

- eglfs on top of fbdev or a proprietary compositor API or Kernel Modesetting + the Direct Rendering Manager

- Wayland: Weston or a compositor implemented with the Qt Wayland Compositor framework + one or more Qt client applications

- X11: Qt applications here run with the same xcb platform plugin that is used in a typical desktop Linux setup

We are now going to take a look at the status of eglfs because this is the most common option, and because some of the other approaches rely on it as well.

Eglfs Backends and Support Levels

eglfs has a number of backends for various devices and stacks. For each of these, the level of support for multiple screens falls into one of the following three categories:

- [1] Output management is available.

- [2] Qt applications can choose at launch time which single screen to output to, but apart from this static setting no other configuration option is provided.

- [3] No output-related configuration is provided.

Note that some of these, in particular [2], may require additional kernel configuration via a video argument or similar. This is out of Qt's domain.

Now let's look at the available backends and the level of multi-display support for each:

- KMS/DRM with GBM buffers (Mesa (e.g. Intel) or modern PowerVR and some other systems) [1]

- KMS/DRM with EGLDevice/EGLOutput/EGLStream (NVIDIA) [1]

- Vivante fbdev (NXP i.MX6) [2]

- Broadcom Dispmanx (Raspberry Pi) [2]

- Mali fbdev (ODROID and others) [3]

- (X11 fullscreen window - targeted mainly for testing and development) [3]

Unsurprisingly, it is the backends using the DRM framework that come out best: there we have a proper connector, encoder and CRTC enumeration API, whereas others have to resort to vendor-specific solutions that are often a lot more limited.

We will now focus on the two DRM-based backends.

Short History of KMS/DRM in Qt

Qt 5.0 - 5.4

Qt 5 featured a kms platform plugin right from the beginning. This was fairly usable, but limited in features and was seen more as a proof of concept. Therefore, with the improvements in eglfs, it became clear that a more unified approach was necessary. Hence the introduction of the eglfs_kms backend for eglfs in Qt 5.5.

Qt 5.5

While originally developed for a PowerVR-based embedded system, the new backend proved immensely useful for all Linux systems running with Mesa, the open-source stack, in particular on Intel hardware. It also featured a plane-based mouse cursor, with basic support for multiple screens added soon afterwards.

Qt 5.6

With the rise of NVIDIA's somewhat different approach to buffer management - see this presentation for an introduction - an additional backend had to be introduced. This is called eglfs_kms_egldevice and allows running on the automotive-oriented Jetson Pro, DRIVE CX and DRIVE PX systems.

The initial version of the plugin was standalone and independent from the existing DRM code. This led to certain deficiencies, most notably the lack of multi-display support.

Qt 5.7

Fortunately, these problems got addressed pretty soon. Qt 5.7 features proper code sharing between the backends, making most of the multi-display support and its JSON-based configuration system available to the EGLStream-based backend as well.

Meanwhile the GBM-based backend got a number of fixes, in particular related to the hardware mouse cursor and the virtual desktop.

Qt 5.8

The upcoming release features two important improvements: it closes the gaps between the GBM and EGLStream backends and introduces support for advanced configurability. The former covers mainly the handling of the virtual desktop and the default, non-plane-based OpenGL mouse cursor which was unable to "move" between screens in previous releases.

The documentation is already browsable at the doc snapshots page.

Besides the ability to specify the virtual desktop layout, the introduction of the touchDevice property is particularly important when building systems where one or more of the screens is made interactive via a touchscreen. Let's take a quick look at this.

Touch Input

Let's say you are creating digital instrument clusters with Qt, with multiple touch-enabled displays involved. Given that the touchscreens report absolute coordinates in their events, how can Qt tell which screen's virtual geometry the event should be translated to? Well, on its own it cannot.

From Qt 5.8 it will be possible to help out the framework. By setting QT_LOGGING_RULES=qt.qpa.*=true we enable logging which lets us figure out the touchscreen's device node. We can then create a little JSON configuration file on the device:

    {
        "device": "drm-nvdc",
        "outputs": [
            {
                "name": "HDMI1",
                "touchDevice": "/dev/input/event5"
            }
        ]
    }

This will come in handy in any case, since the configuration of screen resolution, virtual desktop layout, etc. all happens in the same file.

Now, when a Qt application is launched with the QT_QPA_EGLFS_KMS_CONFIG environment variable pointing to our file, Qt will know that the display connected to the first HDMI port has a touchscreen as well that shows up at /dev/input/event5. Hence any touch event from that device will get correctly associated with the screen in question.
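To make the launch step concrete, here is a minimal Python sketch that writes the touch configuration from above to disk and prepares an environment for starting a Qt application. The file name kms.json, the helper names and the ./myapp binary are assumptions made purely for illustration; only the QT_QPA_PLATFORM and QT_QPA_EGLFS_KMS_CONFIG variables and the JSON keys come from Qt itself.

```python
import json
import os
import tempfile


def write_kms_config(path, device, outputs):
    """Write an eglfs KMS configuration file (hypothetical helper)."""
    config = {"device": device, "outputs": outputs}
    with open(path, "w", encoding="utf-8") as f:
        json.dump(config, f, indent=4)
        f.write("\n")


def qt_environment(config_path, base_env=None):
    """Return a copy of the environment with the eglfs variables set."""
    env = dict(os.environ if base_env is None else base_env)
    env["QT_QPA_PLATFORM"] = "eglfs"
    env["QT_QPA_EGLFS_KMS_CONFIG"] = config_path
    return env


# "kms.json" is an arbitrary file name chosen for this sketch.
config_path = os.path.join(tempfile.mkdtemp(), "kms.json")
write_kms_config(
    config_path,
    "drm-nvdc",
    [{"name": "HDMI1", "touchDevice": "/dev/input/event5"}],
)
env = qt_environment(config_path, base_env={})

# On the device, the application could then be started with something like:
#   subprocess.run(["./myapp"], env=env)   # "./myapp" is hypothetical
```

Generating the file with a JSON library rather than by hand also avoids small syntax slips, such as a trailing comma, that would make the configuration unparsable.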

Qt on the DRIVE CX

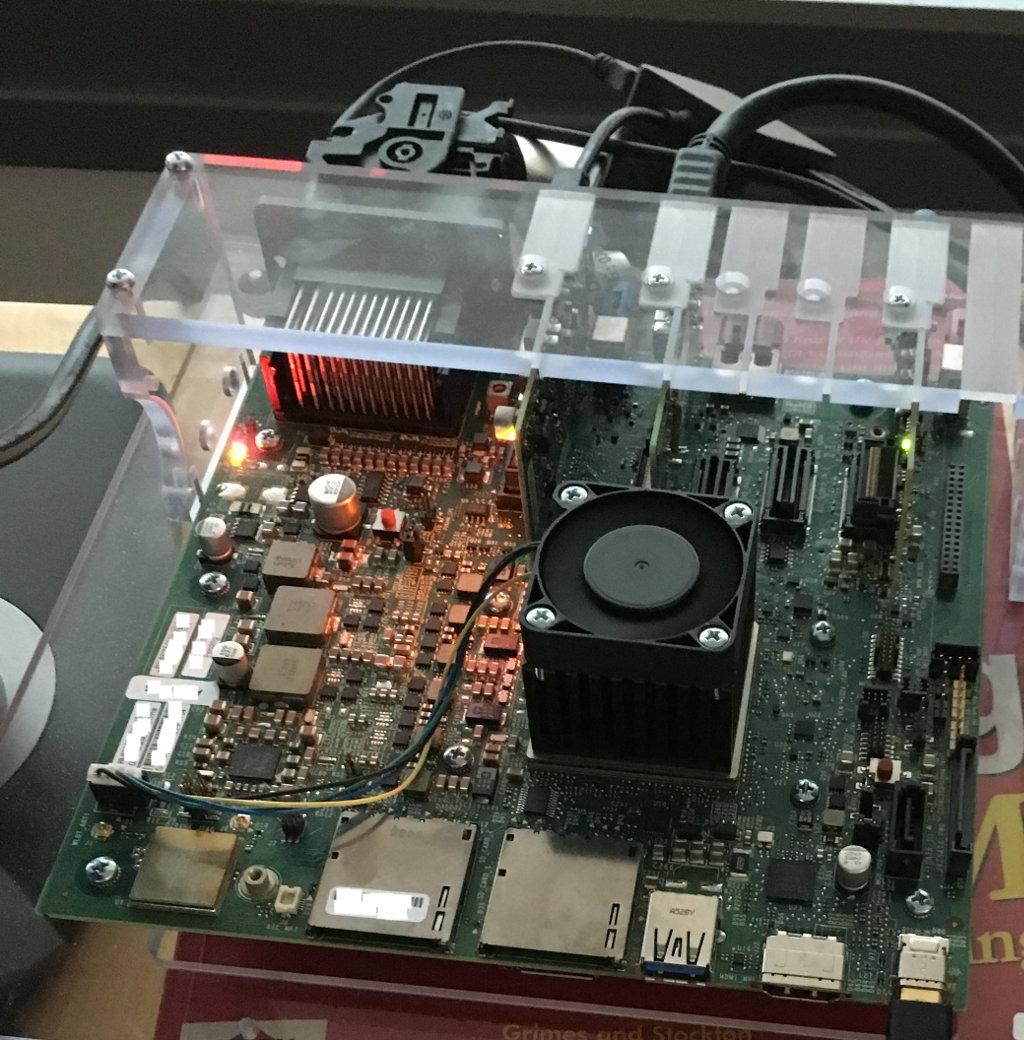

Let's see something in action. In the following example we will use an NVIDIA DRIVE CX board, with two monitors connected via HDMI and DisplayPort. The software stack is the default Vibrante Linux image, with Qt 5.8 deployed on top. Qt applications run with the eglfs platform plugin and its eglfs_kms_egldevice backend.

Our little test environment looks like this:

This already looks impressive, and not just because we found such good use for the Windows 95, MFC, ActiveX and COM books hanging around in the office from previous decades. The two monitors on the sides are showing a Qt Quick application that apparently picks up both screens automatically and can drive both at the same time. Excellent.

The application we are using is available here. It follows the standard multi-display application model for embedded (eglfs): creating a dedicated QQuickWindow (or QQuickView) on each of the available screens. For an example of this, check the code in the GitHub repository, or take a look at the documentation pages, which also have example code snippets.

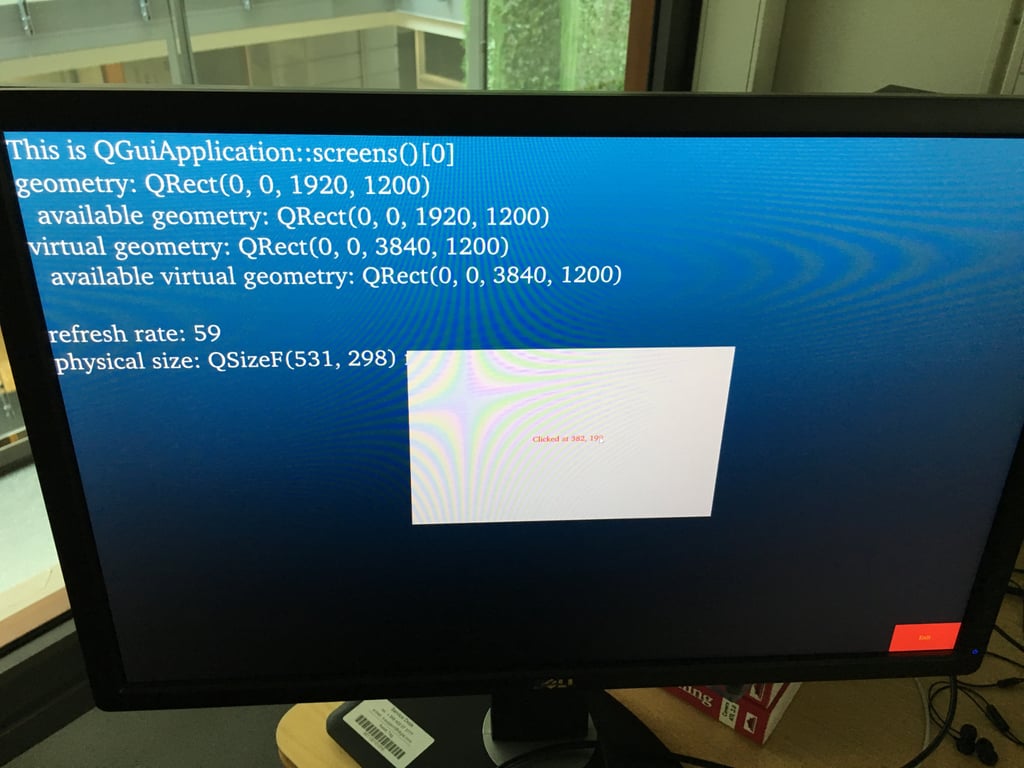

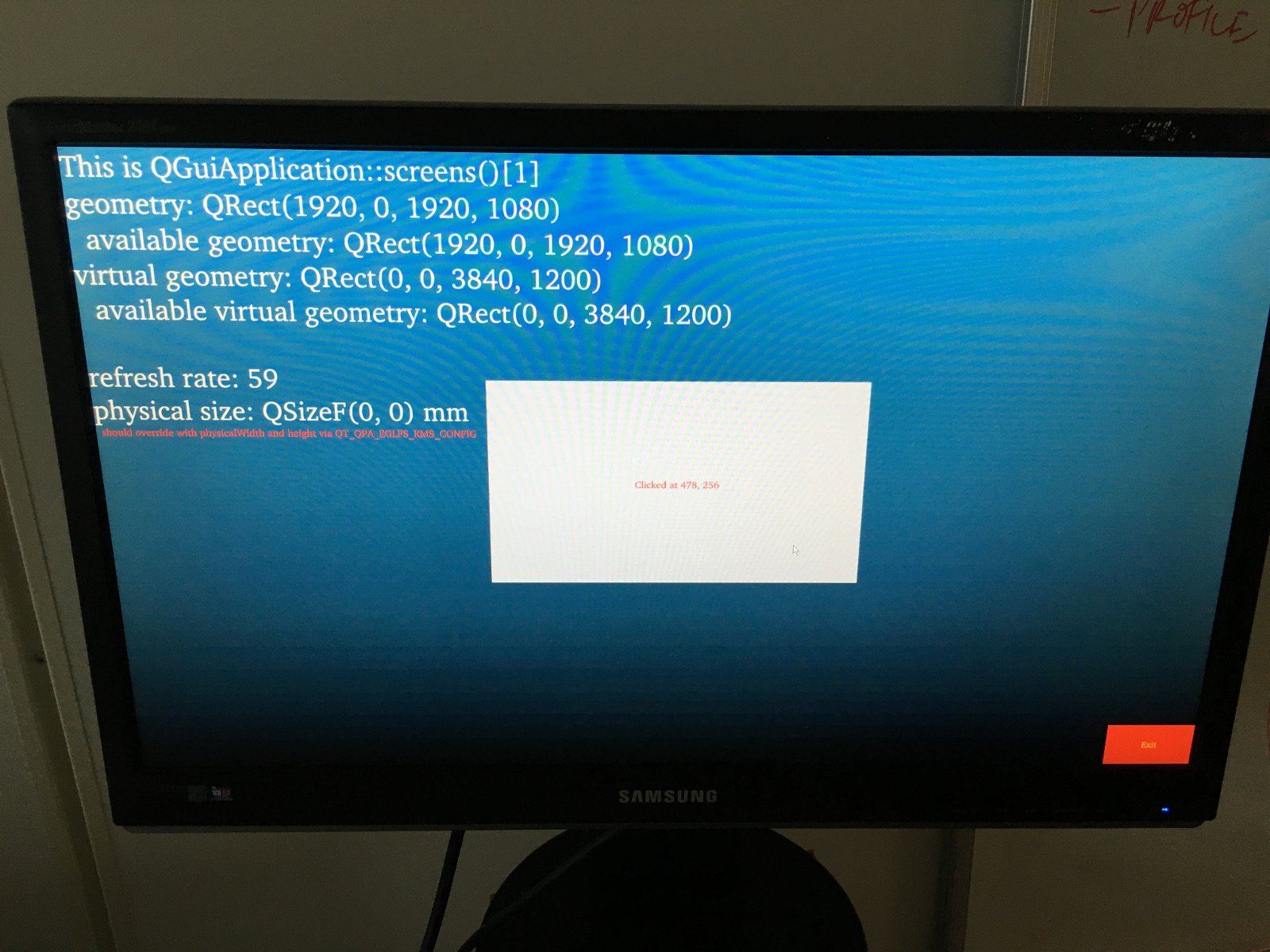

A closer look reveals our desktop configuration:

The gray MouseArea is used to test mouse and touch input handling. Hooking up a USB touch-enabled display immediately reveals the problems of pre-5.8 Qt versions: touching that area would only deliver events to it when the screen happened to be the first one. In Qt 5.8 this can now be handled as described above.

It is important to understand the screen geometry concepts in QScreen. When the screens form a virtual desktop (which is the default for eglfs), the interpretation is the following:

- geometry() - the screen's position and size in the virtual desktop

- availableGeometry() - without a windowing system this is the same as geometry()

- virtualGeometry() - the geometry of the entire virtual desktop to which the screen belongs

- availableVirtualGeometry() - same as virtualGeometry()

- virtualSiblings() - the list of all screens belonging to the same virtual desktop
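The relationship between these values can be illustrated with a small Python model: the virtual geometry is the bounding rectangle of the geometries of all sibling screens. This is only a sketch of the concept, not Qt code; the Rect type, the united() helper and the two 1920x1080 screens are assumptions for the example.

```python
from typing import List, NamedTuple


class Rect(NamedTuple):
    """Position and size of a screen in the virtual desktop (illustrative type)."""
    x: int
    y: int
    width: int
    height: int


def united(rects: List[Rect]) -> Rect:
    """Return the bounding rectangle of all given rectangles."""
    left = min(r.x for r in rects)
    top = min(r.y for r in rects)
    right = max(r.x + r.width for r in rects)
    bottom = max(r.y + r.height for r in rects)
    return Rect(left, top, right - left, bottom - top)


# Assumed setup: two 1920x1080 screens placed side by side.
screens = {
    "HDMI1": Rect(0, 0, 1920, 1080),     # what geometry() would report
    "DP1": Rect(1920, 0, 1920, 1080),
}

# What virtualGeometry() would report on either screen in this model.
virtual_geometry = united(list(screens.values()))
print(virtual_geometry)  # Rect(x=0, y=0, width=3840, height=1080)
```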

Configuration

How does the virtual desktop get formed? It may seem fairly random by default. In fact it simply follows the order DRM connectors are reported in. This is often not ideal. Fortunately, it is configurable starting with Qt 5.8. For instance, to ensure that the monitor on the first HDMI port gets a top-left position of (0, 0), we could add something like the following to the configuration file specified in QT_QPA_EGLFS_KMS_CONFIG:

    {
        "device": "drm-nvdc",
        "outputs": [
            {
                "name": "HDMI1",
                "virtualIndex": 0
            },
            {
                "name": "DP1",
                "virtualIndex": 1
            }
        ]
    }

If we wanted to create a vertical layout instead of horizontal (think an instrument cluster demo with three or more screens stacked under each other), we could have added:

    {
        "device": "drm-nvdc",
        "virtualDesktopLayout": "vertical",
        ...
    }

More complex layouts, for example a T-shaped setup with 4 screens, are also possible via the virtualPos property:

    {
        ...
        "outputs": [
            { "name": "HDMI1", "virtualIndex": 0 },
            { "name": "HDMI2", "virtualIndex": 1 },
            { "name": "DP1", "virtualIndex": 2 },
            { "name": "DP2", "virtualPos": "1920, 1080" }
        ]
    }

Here the fourth screen's virtual position is specified explicitly.
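To see where each screen ends up, the following Python sketch models the placement rules described above: outputs are ordered by virtualIndex and placed next to (or under) each other, unless virtualPos gives an explicit position. This is a simplified model for illustration and not Qt's actual implementation; the layout_screens() function and the assumption that every screen runs at 1920x1080 are mine.

```python
def layout_screens(outputs, sizes, layout="horizontal"):
    """Compute the top-left virtual desktop position of each output.

    outputs: list of dicts using the JSON keys "name", "virtualIndex", "virtualPos"
    sizes:   dict mapping output name to (width, height) in pixels
    layout:  "horizontal" or "vertical"
    """
    # Outputs without a virtualIndex are placed after those that have one.
    ordered = sorted(outputs, key=lambda o: o.get("virtualIndex", float("inf")))

    positions = {}
    x = y = 0
    for out in ordered:
        name = out["name"]
        if "virtualPos" in out:
            # An explicit position such as "1920, 1080" overrides the automatic layout.
            px, py = (int(v) for v in out["virtualPos"].split(","))
            positions[name] = (px, py)
            continue
        positions[name] = (x, y)
        width, height = sizes[name]
        if layout == "vertical":
            y += height
        else:
            x += width
    return positions


# The T-shaped example from above, assuming four 1920x1080 screens.
outputs = [
    {"name": "HDMI1", "virtualIndex": 0},
    {"name": "HDMI2", "virtualIndex": 1},
    {"name": "DP1", "virtualIndex": 2},
    {"name": "DP2", "virtualPos": "1920, 1080"},
]
sizes = {name: (1920, 1080) for name in ("HDMI1", "HDMI2", "DP1", "DP2")}

positions = layout_screens(outputs, sizes)
for name, pos in positions.items():
    print(name, pos)
```

With these assumed sizes, the first three screens form the horizontal bar of the T at x = 0, 1920 and 3840, while DP2 sits directly below the middle screen.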

In addition to virtualIndex and virtualPos, the other commonly used properties are mode, physicalWidth and physicalHeight. mode sets the desired mode for the screen and is typically a resolution, e.g. "1920x1080", but can also be set to "off", "current", or "preferred" (which is the default).

For example:

    {
        "device": "drm-nvdc",
        "outputs": [
            {
                "name": "HDMI1",
                "mode": "1024x768"
            },
            {
                "name": "DP1",
                "mode": "off"
            }
        ]
    }

The physical sizes of the displays become quite important when working with text and components from Qt Quick Controls. This is because these base size calculations on the logical DPI that is in turn based on the physical width and height. In desktop environments queries for these sizes usually work just fine, so no further actions are needed. On embedded however, it has often been necessary to provide the sizes in millimeters via the environment variables QT_QPA_EGLFS_PHYSICAL_WIDTH and QT_QPA_EGLFS_PHYSICAL_HEIGHT. This is not suitable in a multi-display environment, and therefore Qt 5.8 introduces an alternative: the physicalWidth and physicalHeight properties (values are in millimeters) in the JSON configuration file. As witnessed in the second screenshot above, the physical sizes did not get reported correctly in our demo setup. This can be corrected, as it was done for the monitor in the first screenshot, by doing something like:

    {
        "device": "drm-nvdc",
        "outputs": [
            {
                "name": "HDMI1",
                "physicalWidth": 531,
                "physicalHeight": 298
            },
            ...
        ]
    }
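To get a feel for why these numbers matter, here is a quick back-of-the-envelope calculation in Python of the dots per inch that follow from a physical size of 531 x 298 mm. The 1920x1080 resolution is an assumption for this example, and Qt's own DPI calculation may round or adjust the values differently.

```python
MM_PER_INCH = 25.4


def dots_per_inch(pixels, millimeters):
    """Pixels per inch along one axis, given the physical length in millimeters."""
    return pixels * MM_PER_INCH / millimeters


# Physical size from the configuration above; the resolution is assumed.
horizontal_dpi = dots_per_inch(1920, 531)
vertical_dpi = dots_per_inch(1080, 298)

print(round(horizontal_dpi, 2), round(vertical_dpi, 2))  # 91.84 92.05
```

A wrongly reported physical size shifts these values, and with them the size of text and controls on screen.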

As always, enabling logging can be a tremendous help for troubleshooting. There are a number of logging categories for eglfs, its backends and input, so the easiest approach is often to enable everything under qt.qpa by running export QT_LOGGING_RULES=qt.qpa.*=true before starting a Qt application.

What About Wayland?

What about systems using multiple GUI processes and compositing them via a Qt-based Wayland compositor? Given that the compositor application still needs a platform plugin to run with, and that is typically eglfs, everything described above applies to most Wayland-based systems as well.

Once the displays are configured correctly, the compositor can create multiple QQuickWindow instances (QML scenes) targeting each of the connected screens. These can then be assigned to the corresponding WaylandOutput items. Check the multi output example for a simple compositor with multiple outputs.

The rest, meaning how the client applications' windows are placed, perhaps using the scenes on the different displays as one big virtual scene, moving client "windows" between screens, etc., are all in QtWayland's domain.

What's Missing and Future Plans

The QML side of screen management could benefit from some minor improvements: unlike C++, where QScreen, QWindow and QWindow::setScreen() are first class citizens, Qt Quick currently has no simple way to associate a Window with a QScreen, mainly because QScreen instances are only partially exposed to the QML world. While this is not fatal and can be worked around with some C++ code, as usual, the story here will have to be enhanced a bit.

Another missing feature is the ability to connect and disconnect screens at runtime. Currently such hotplugging is not supported by any of the backends. It is worth noting that with embedded systems the urgency is probably a lot lower than with ordinary desktop PCs or laptops, since the need to change screens in such a manner is less common. Nevertheless this is something that is on the roadmap for future releases.

That's it for now. As we know, more screens are better than one, so why not just let Qt power them all?

Comments

Nice article. Along with managing multiple screens, I would like to see something written about using multiple applications and what the best practices are. I think it's been the norm to just have one giant application running in something like Boot to Qt. But as applications get more sophisticated, it seems silly to load so many resources at one time. For instance, if there is one part of your app that needs the web component but it is seldom used, why would you want those resources loaded every time at startup? Sure, there are ways to dynamically load and unload shared libraries from within your code, but this is somewhat of a burden.

A small window manager would be ideal for managing multiple applications. Perhaps there are already ways to do this with something like the LXQt desktop. The trouble here might be that it's made for a traditional desktop, and the look and feel as well as the workflow cannot be easily modified.

Another solution might be to use Android, but this seems a very heavy-handed approach.

I would think that some kind of desktop manager must be used for your automotive customers. If they are offering a gauge display, would they want to put this screen at risk because of a bug in something like a photo viewer display?

Sorry for such a long and somewhat off-topic post. I am hoping others will add comments concerning their needs and solutions for managing multiple apps.

Yes, that's where Wayland and the Qt Wayland Compositor framework come into the picture. Once you have a need for showing the output of multiple GUI processes, instead of the basic one-fullscreen-GUI-process model, switch over to the one compositor + multiple client apps model. See this post http://www.qt.io/blog/2016/... and the first screenshot.

Of course, the choice is not always obvious - many devices are fine and better off with the single app model due to its simplicity and higher performance. The multi-process route is better suited for more complex systems, possibly with 3rd party apps involved, etc.

In the end most of the stuff in this post is relevant in either case: even in the multi-process model you have to have a way to render something in the compositor application (if it is Qt-based). In practice that will mean using the eglfs platform plugin for the compositor and the wayland plugin for all other (client) apps.

And since you mentioned automotive: yes, for typical IVI cases multi-process is the way to go. And in case of the Qt Automotive Suite ( https://doc.qt.io/QtAutomot... ) that's exactly what you'll get: the "application manager" ( https://doc.qt.io/QtApplica... ) is in fact a Qt-based Wayland compositor implemented with the Qt Wayland Compositor framework.

Interesting article. One thing I noticed is that users of Raspberry Pi and similar easy-to-use boards tend to use the board like a desktop and expect it to work like a real desktop system. With the rise of Qt Quick applications this is a problem because, as far as I know, it is not possible to run Qt Quick with the system window manager (i.e. showing multiple windows with xcb instead of eglfs). Also, the interaction between the windows (copy and paste, dragging, ...) is not possible.

Another problem is remote desktops (VNC) and Qt Quick (but here the new software renderer helps).

As for remoting and other special cases, the integrated 'software' Qt Quick backend in 5.8 will help a lot.

Regarding the Raspberry Pi, very true. Unfortunately the typical use cases of that board are very different from what one expects in the device creation context (even though the RPi + eglfs is a very capable combination, once one strips out all the unnecessary X11 stuff from distros like Raspbian - or generates a more targeted image/SDK via Yocto or similar).

Somewhat off-topic: As for xcb on the Pi, the traditional problem is the lack of accelerated graphics (EGL+OpenGL) under X. The Broadcom drivers only do EGL/GLES on top of Dispmanx, the proprietary composition API. This is fine for device creation and eglfs, not so much for the traditional desktop stuff. Raspbian's and others' attempts to "solve" this by using the software rasterizer of Mesa only create an unholy mess (due to having two EGL/GLES stacks...), and are not really suitable for proper graphics anyhow. Long-term the pain may be lessened by having actual acceleration working in Mesa (see VC4), although it is unclear what that would mean for multimedia (like accelerated video) and friends, which are currently handled well by the Broadcom stack. For the time being the best option for Qt Quick under X11 on the Pi is probably to use Qt 5.8 with the new software backend (it will likely perform better than doing full GL via a software rasterizer).

Any answer as to why FastUIDraw is faster than the Qt scenegraph?

https://www.x.org/wiki/Even...

https://www.x.org/wiki/Even...

Did anybody contact Kevin Rogovin for the benchmark source code?

https://www.youtube.com/wat...

The benchmark sources are in git. The tests are against Qt 4.x (4.8 probably, which would mean a framework from 2011 with a deprecated UI rendering paradigm...) and QPainter with the raster, GL and presumably the X11 native backend. It has no relevance to the scenegraph or Qt Quick in Qt 5.

His target is scenegraph for web/UI rendering

https://github.com/01org/fa...

so that is the reason why he uses QPainter.

By the way, I really miss this canvas-style API in QML, as the Canvas target is always a QImage :( so it isn't vector rendering at all.